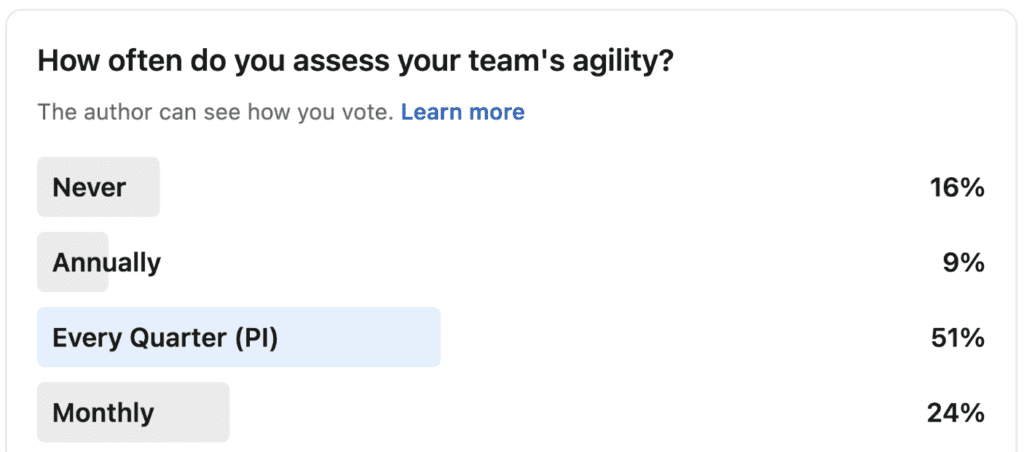

Before writing this article, we were curious to know more about how often teams are measuring their agility (if ever). We ran an informal poll on LinkedIn, and the results were fascinating.

Assessing your team’s agility is a crucial step toward continuous improvement. After all, you can’t get where you want to go if you don’t know where you are.

But you probably have questions: How do you measure a team’s agility? Who should do it and when? What happens with the data you collect, and what should you do afterwards?

We’re here to answer these questions. Use the following sections to guide you:

- What is Team and Technical Agility?

- What is the team and technical agility assessment?

- Assessment tips, including before, during, and after you assess

- Team and technical agility assessment resources

These sections include a video showing where to find the team and technical agility assessment in SAFe® Studio and what the assessment looks like.

What Is Team and Technical Agility?

Before jumping into the assessment, it’s important to understand team and technical agility. This will help determine if you want to run the assessment and which areas may be most beneficial for your team.

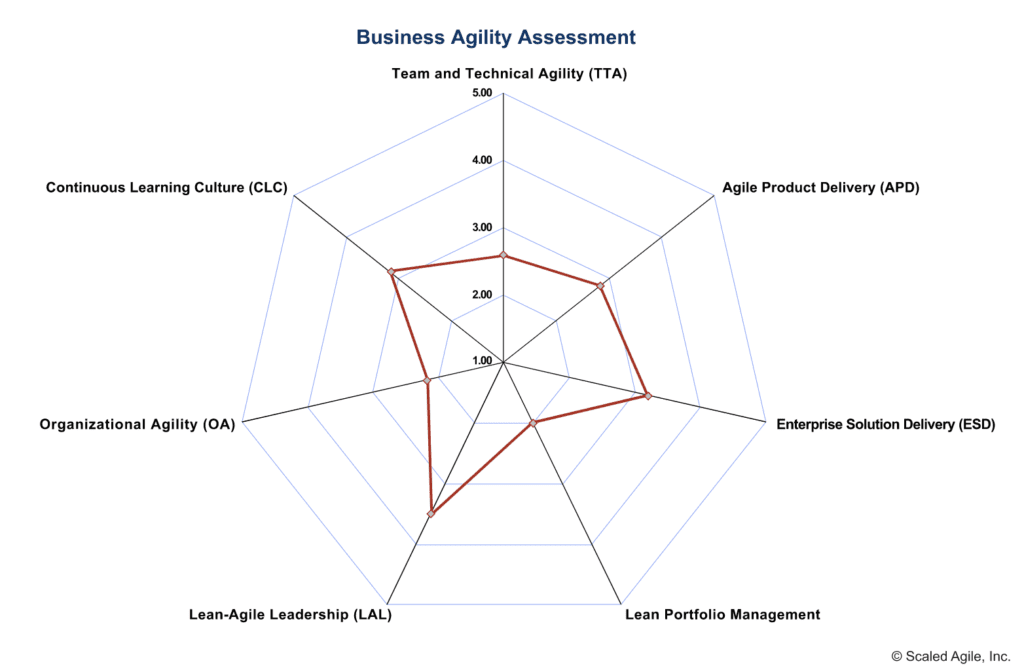

Team and technical agility is a team’s ability to deliver solutions that meet customers’ needs. It’s one of the seven business agility core competencies.

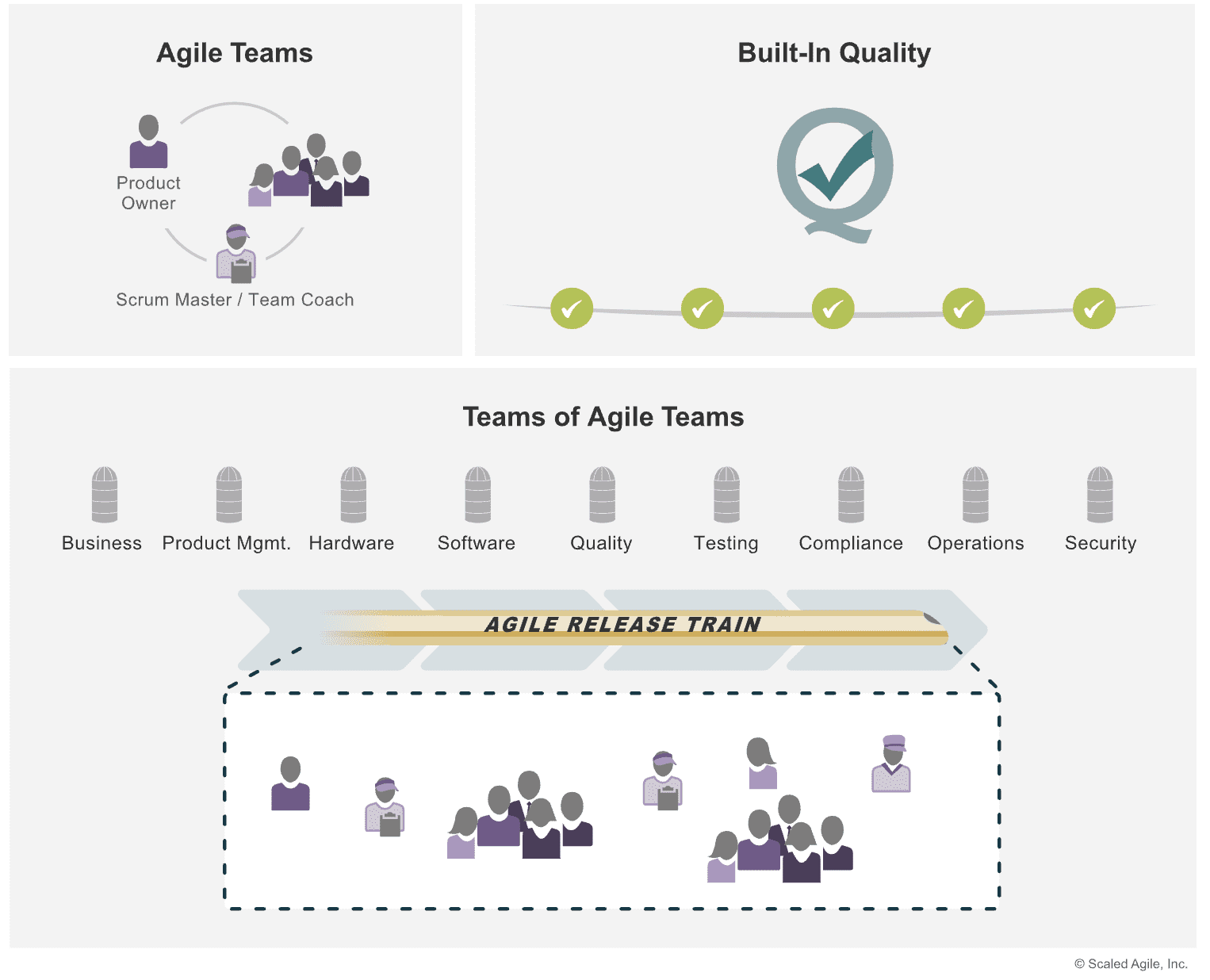

Team and technical agility contains three parts:

- Agile teams

- Teams of Agile teams

- Built-in quality

Agile teams

As the basic building block of an Agile Release Train (ART), the Agile team is responsible for:

- Connecting with the customer

- Planning the work

- Delivering value

- Getting feedback

- Improving relentlessly

They’re the ones on the ground bringing the product roadmap to life. They must also plan, commit, and improve together to execute in unison.

Teams of Agile teams

An ART is where Agile teams work together to deliver solutions. The ART has the same responsibilities as the Agile team but on a larger scale. The ART also plans, commits, executes, and improves together.

Built-in quality

Since Agile teams and ARTs are responsible for building products and delivering value, they must follow built-in quality practices. These practices apply during development and the review process.

As we state in the Framework article: “Built-in quality is even more critical for large solutions, as the cumulative effect of even minor defects and wrong assumptions may create unacceptable consequences.”

It’s important to consider all three areas when assessing your team’s agility.

What Is the Team and Technical Agility Assessment?

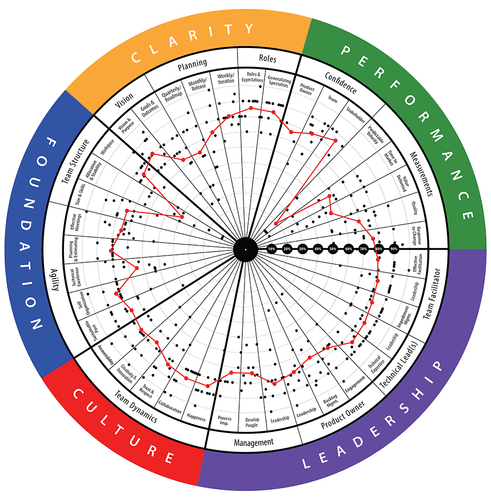

The team and technical agility assessment is a review tool that measures your team’s agility through a comprehensive survey and set of recommendations.

However, there’s more to it than that. We’ll review the information you need to fully understand what you learn from this assessment and how to access it.

Each question in the assessment asks team members to rate statements about their teams on the following scale:

- True

- More True than False

- Neither False nor True

- More False than True

- False

- Not Applicable

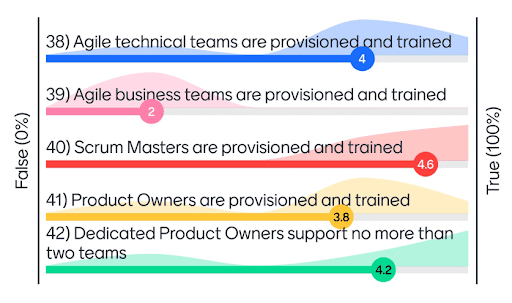

What information can I get from the team and technical agility assessment?

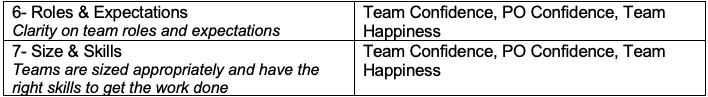

The team and technical agility assessment helps teams identify areas for improvement, highlight strengths worth celebrating, and set a baseline against which to measure future progress. It asks questions like the following about how your team operates:

- Do team members have cross-functional skills?

- Do you have a dedicated Product Owner (PO)?

- How are teams of teams organized in your ARTs?

- Do you use technical practices like test-driven development and peer review?

- How does your team tackle technical debt?

For facilitators, including Scrum Masters/Team Coaches (SM/TC), the team and technical agility assessment is a great way to create space for team reflection beyond a typical retrospective. It can also increase engagement and buy-in for the team to take on actionable improvement items.

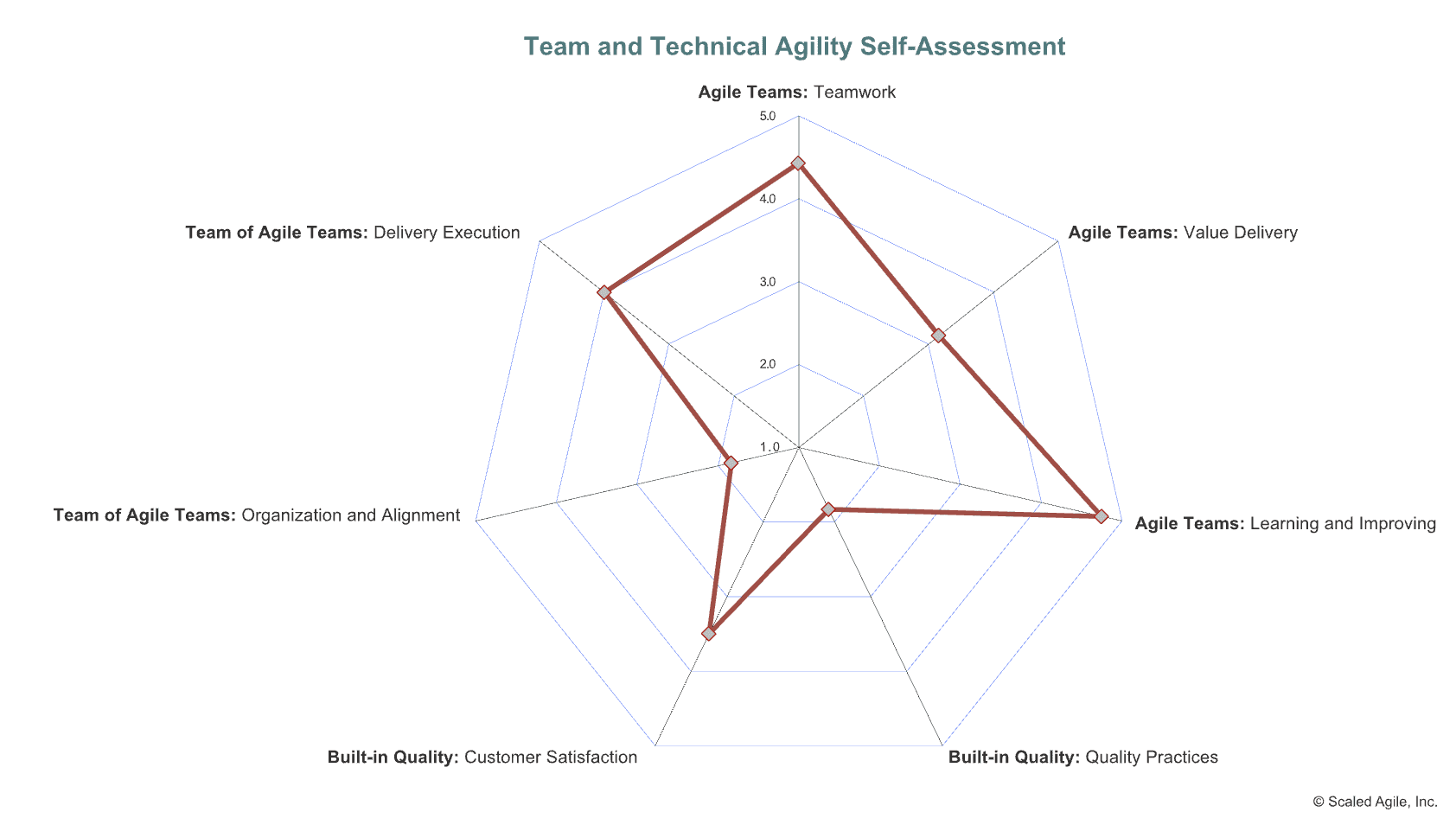

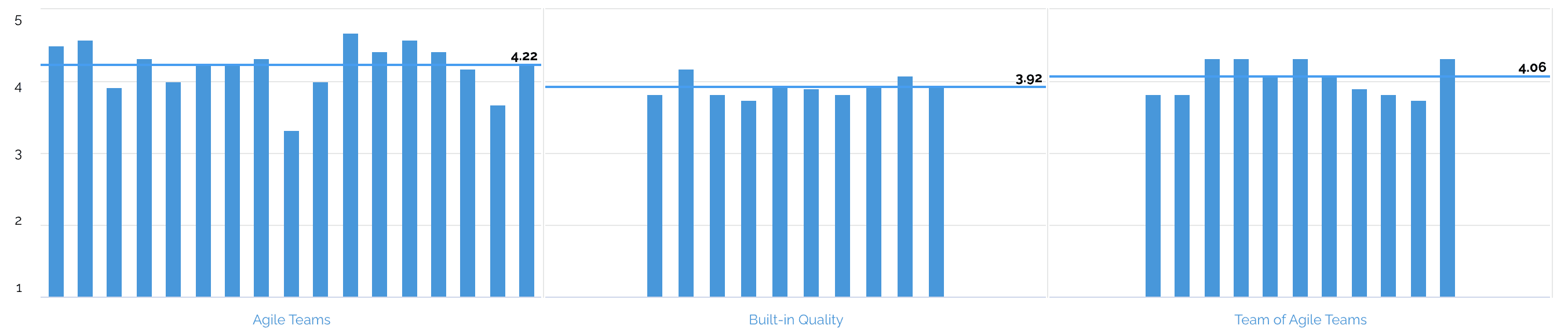

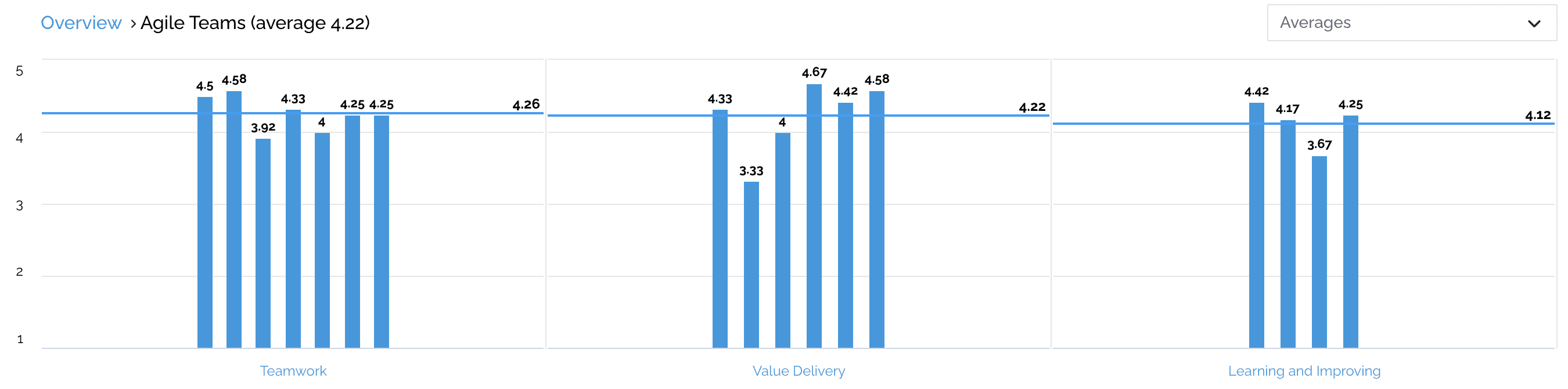

Once the assessment is complete, the team receives the results broken down by each category of team and technical agility.

When you click on a category, the results break into three sub-categories to drill down even further into the responses.

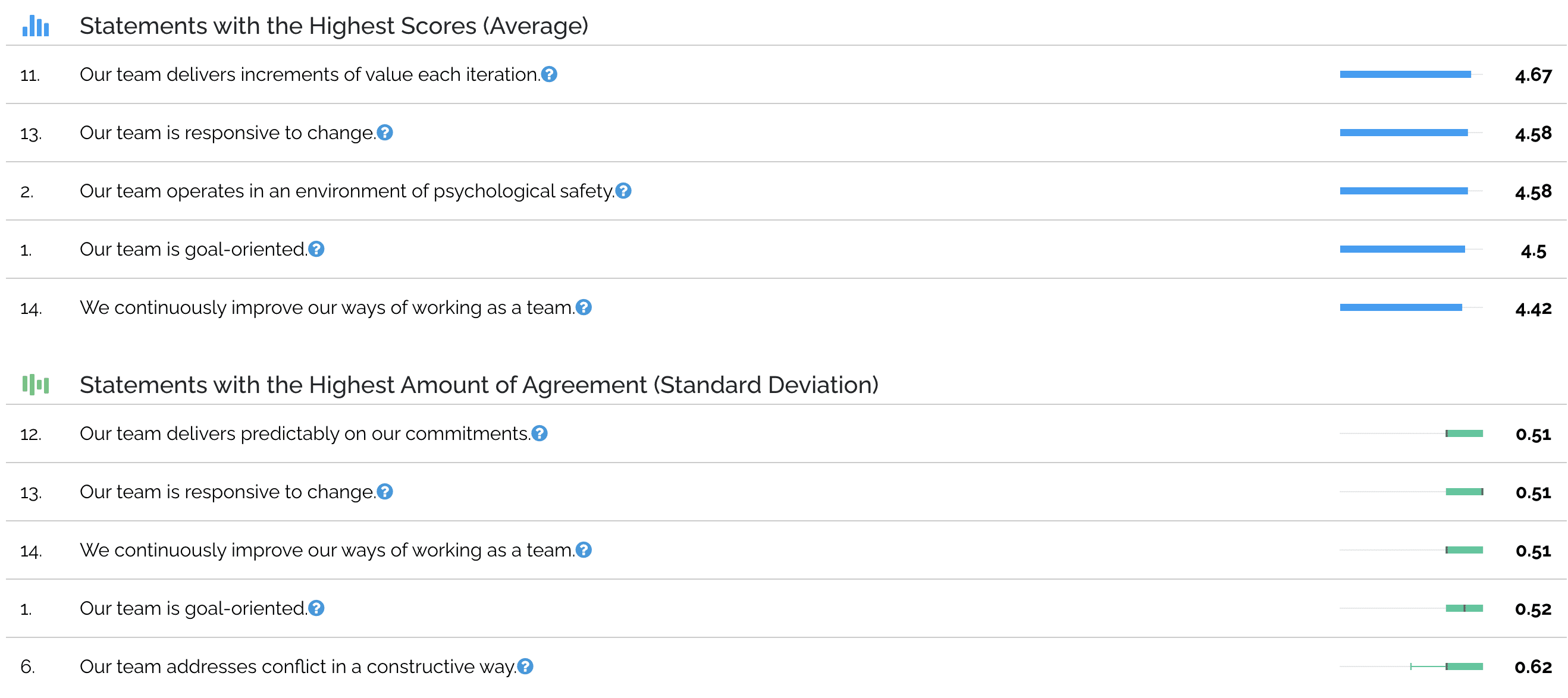

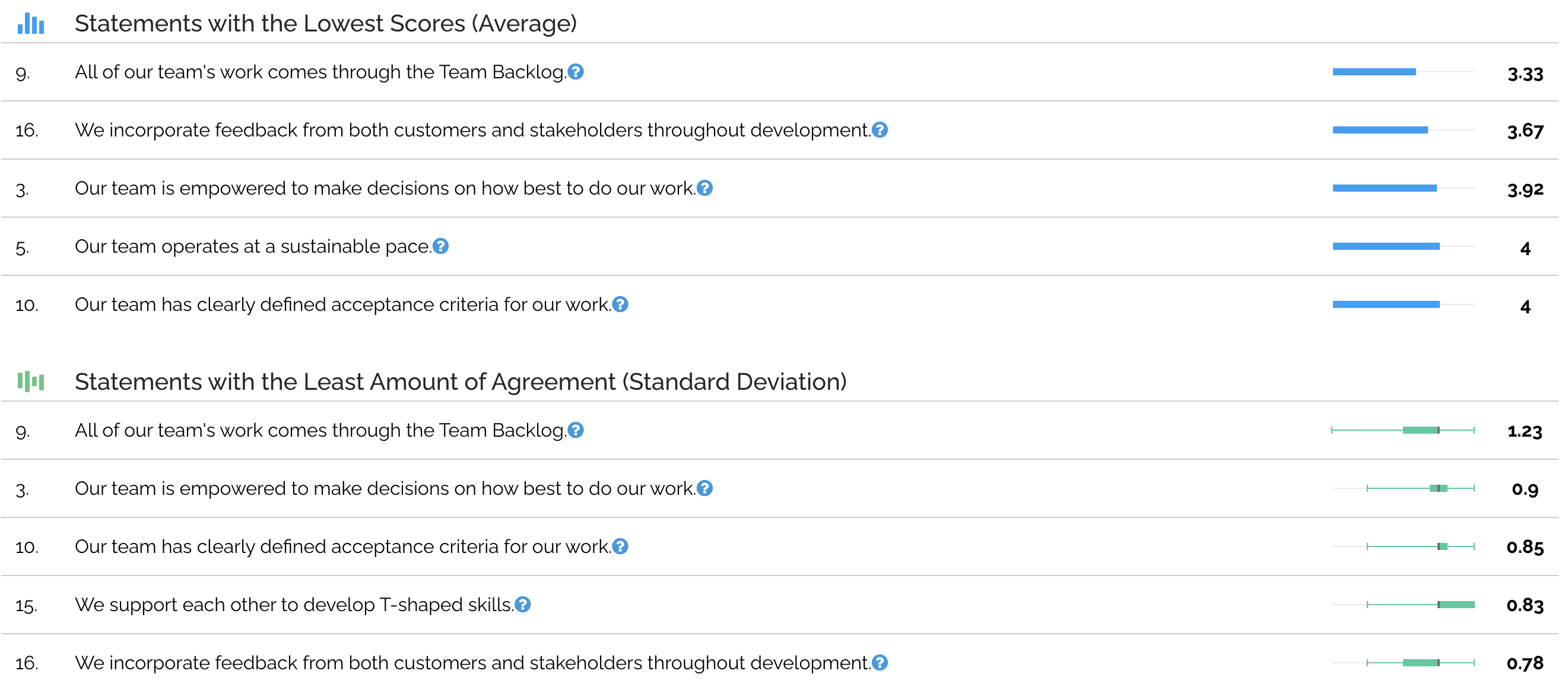

In addition to the responses, you receive key strengths. The answers with the highest average scores and the lowest deviations between team members are key strengths.

Inversely, you also get key opportunities. The answers with the lowest average scores and highest deviations between team members highlight areas where more focus is needed.
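To make the idea of key strengths and key opportunities concrete, here is a minimal Python sketch of how such a ranking could be computed from raw answers. It is not the tool's actual implementation. The numeric mapping of the answer scale (5 for True down to 1 for False), the use of population standard deviation as the "deviation," the function names, and the sample responses are all assumptions made for illustration.

```python
from statistics import mean, pstdev

# Assumed mapping from the answer scale to numbers (5 = most favorable).
# "Not Applicable" is deliberately left out so it is excluded from the math.
SCALE = {
    "True": 5,
    "More True than False": 4,
    "Neither False nor True": 3,
    "More False than True": 2,
    "False": 1,
}


def summarize(responses):
    """Return (statement, average score, deviation) for each statement."""
    summary = []
    for statement, answers in responses.items():
        scores = [SCALE[a] for a in answers if a in SCALE]
        if scores:  # skip statements where everyone answered "Not Applicable"
            summary.append((statement, mean(scores), pstdev(scores)))
    return summary


def key_strengths(summary, top=1):
    # Highest average first; ties go to the statement with the lowest deviation.
    return sorted(summary, key=lambda row: (-row[1], row[2]))[:top]


def key_opportunities(summary, top=1):
    # Lowest average first; ties go to the statement with the highest deviation.
    return sorted(summary, key=lambda row: (row[1], -row[2]))[:top]


# Made-up answers from a four-person team, for illustration only.
responses = {
    "Teams execute standard iteration events":
        ["True", "False", "True", "False"],
    "Team members have cross-functional skills":
        ["True", "More True than False", "True", "True"],
    "The team has a dedicated Product Owner":
        ["More False than True", "False", "Not Applicable", "False"],
}

summary = summarize(responses)
print(key_strengths(summary))
print(key_opportunities(summary))
```

In this sample, the first statement lands in the middle on average but has the largest deviation, which is a signal that the team sees the same practice very differently and that the topic deserves a conversation.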

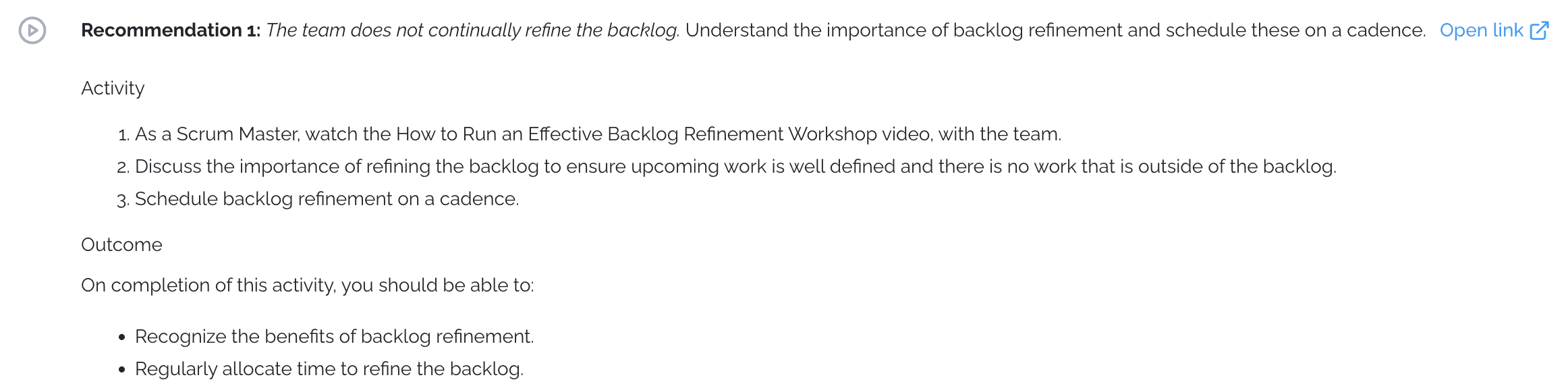

The assessment will include growth recommendations based on your team’s results. These are suggested next steps to help your team improve in the areas and on the statements where it scored lowest.

How do I access the team and technical agility assessment?

You can access the team and technical agility assessment in SAFe® Studio. Use the following steps:

- Log into SAFe® Studio.

- Navigate to the My SAFe Assessments page under “Practice” in the main navigation bar on the left side of the homepage.

- Click the Learn More button under Comparative Agility, our Measure and Grow Partner. The team and technical agility assessment runs through their platform.

- Click on the Click Here to Get Started button.

- From there, you’ll land on the Comparative Agility website. If you want to create an account to save your progress and assessment data, you may do so. If you’d like to skip to the assessment, click on Start Survey in the bottom right of the screen.

- Select Team and Technical Agility Assessment.

- Click Continue in the pop-up that appears.

- The assessment will then start in a new tab.

See each of these steps in action in this video.

Team and Technical Agility Assessment Best Practices

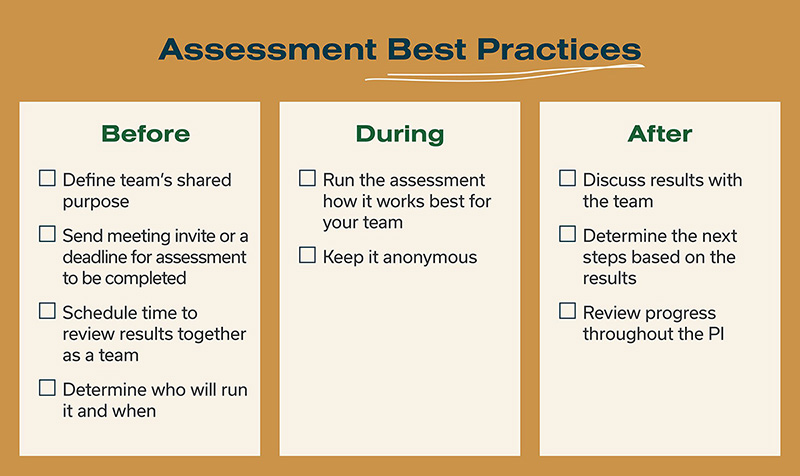

To ensure you get the best results from the team and technical agility assessment, we’ve compiled recommended actions before, during, and after the assessment.

Before facilitating the team and technical agility assessment

Being intentional about how you set up the assessment with your team will give you results you can work with after the assessment.

Who should run the assessment

Running assessments can be tricky for a few reasons.

- Teams might feel defensive about being “measured”

- Self-reported data isn’t always objective or accurate

- Emotions and framing can impact the results

That’s why SAFe recommends that an SM/TC or other trained facilitator run the assessment. An SM/TC, SPC, or Agile coach can help ensure teams understand their performance and know where to focus their improvement efforts.

When to run the assessment

It’s never too early or too late to know where you stand. Running the assessment for your team when starting with an Agile transformation will help you target the areas where you most need to improve, but you can assess team performance anytime.

As for how frequently you should run it, it’s probably more valuable to do it on a cadence—either once a PI or once a year, depending on the team’s goals and interests. There’s a lot of motivation in seeing how you grow and progress as a team, and it’s easier to celebrate wins demonstrated through documented change over time.

How to prepare to run the assessment

Before you start the team and technical agility assessment, define your team’s shared purpose. This will help you generate buy-in and excitement. If the team feels like they’re just completing the assessment because the SM/TC said so, it won’t be successful. They must see value in it for them as individuals and as a team.

Some questions we like to ask to set this purpose include:

- What do we want it to feel like to be part of this team two PIs from now?

- How will our work lives be improved when we check in one year from now?

If you’re completing the assessment as a team, we like to kick it off with a meeting invitation that includes a draft agenda. Sending this ahead of time gives everyone a chance to prepare. You can keep the agenda loose, so you have the flexibility to spend more or less time discussing particular areas, depending on how your team chooses to engage with each question.

If you’re completing the assessment asynchronously, give team members a deadline for completing it. Then send a meeting invitation to review the results as a team.

Facilitating the team and technical agility assessment

Now it’s time to complete the assessment. These are a couple of tips to consider when facilitating the assessment for your team.

Running the assessment

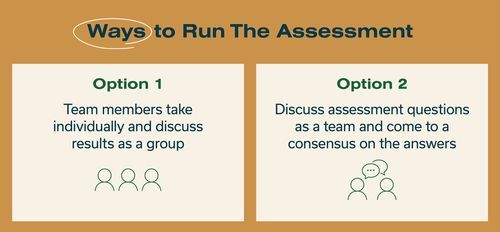

There are two ways you can approach running this assessment. Each has a different value. Choose the option based on your team’s culture.

Option one is to have team members take the assessment individually and then discuss the results as a group. You can do this in one of two ways: have team members complete the assessment asynchronously by a certain date and review the results as a team later, or set a time for teammates to take the assessment simultaneously and discuss the results immediately afterward.

Option two is to discuss the assessment questions as a team and agree on the group’s answers.

When we ran this assessment, we had team members do it individually so we could focus our time together on reviews and actions. If you run it asynchronously, be available to team members if they have questions before you review your answers.

Keeping the assessment anonymous

Keeping the answers anonymous is helpful if you want more accurate results. We like to be clear upfront that the assessment will be anonymous so that team members can feel confident about being honest in their answers.

For example, with our teams, we not only explained the confidentiality of individuals’ answers but also demonstrated in real time how the tool works so that the process would feel open and transparent. We also clarified that we would not be using the data to compare teams to each other or for any purpose other than to gain a shared understanding of where we are and to select improvement items based on the team’s stated goals.

However, if you choose to complete the assessment as a team and decide on each answer together, answering anonymously isn’t possible. Choose the option you think works best for your team’s culture.

After facilitating the team and technical agility assessment

The main point of running the team and technical agility assessment is to get the information it provides. What you do with this information determines its impact on your team.

What to do with the assessment results

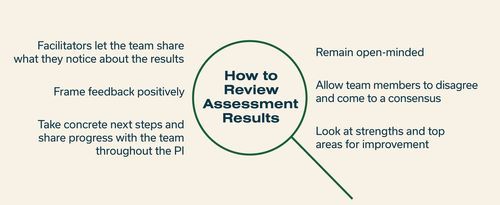

Once you’ve completed the assessment using one of the two approaches, walk through the results with the team:

- Review sections individually

- Show aggregate results

- Allow the team to notice top strengths and areas for improvement

- Don’t tell the team what you think as the facilitator

We learned in the assessment how much we disagreed on some items. For example, even with a statement as simple as “Teams execute standard iteration events,” some team members scored us a five (out of five) while others scored us a one.

We treated every score as valid and sought to understand why some team members scored high and others low, just like we do when estimating the size of a user story.

This discussion led to:

- Knowing where to improve

- Uncovering different perspectives

- Showing how we were doing as a team

- Prompting rich conversations

- Encouraging meaningful progress

We know it can be challenging to give and receive feedback, especially when the feedback focuses on improving. Here are a few ways to make conversations about the assessment results productive with your team.

Using the assessment to improve

With your assessment results in hand, it’s time to take actions that help you improve.

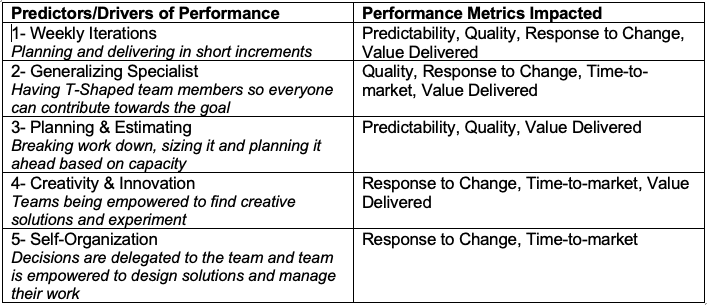

For each dimension of the team and technical agility assessment, SAFe provides growth recommendations to help teams focus on the areas that matter most and prioritize their next steps.

Growth recommendations are helpful because they’re bite-sized actions to break down the overall area of improvement. They’re easy to fit into the PI without overloading capacity.

Examples of growth recommendations:

Example 1:

- As an SM/TC, watch the How to Run an Effective Backlog Refinement Workshop video with the team.

- Discuss the importance of refining the backlog to ensure upcoming work is well-defined and there is no work outside the backlog.

- Schedule backlog refinement on a cadence.

Example 2:

- As a team, use the Identifying Key Stakeholders Collaborate template and answer the following questions:

  - Who is the customer of our work? (This could be internal or external customers.)

  - Who is affected by our work?

  - Who provides key inputs or influences the goals of our work?

  - Whose feedback do we need to progress the work?

- Maintain a list of key stakeholders.

Example 3:

- As a team, collect metrics to understand the current situation. Include the total number of tests, the frequency each test is run, test coverage, the time required to build the Solution and execute the tests, the percentage of automated tests, and the number of defects. Additionally, quantify the manual testing effort each Iteration and during a significant new release.

- Present and discuss these metrics with the key stakeholders, highlighting how the lack of automation impacts quality and time to market.

- Create a plan for increasing the amount of test automation.
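For Example 3, two of the listed metrics are simple arithmetic once the raw counts are in hand. The sketch below is one possible way to capture that baseline in Python. The function name, the parameters, and the sample figures are hypothetical; substitute your team's own counts.

```python
def automation_baseline(total_tests, automated_tests,
                        manual_hours_per_iteration, iterations_per_pi):
    """Summarize test automation coverage and manual testing effort.

    All inputs are counts or estimates the team gathers itself;
    the sample values used below are illustrative only.
    """
    if total_tests <= 0:
        raise ValueError("total_tests must be positive")
    return {
        # Share of the test suite that runs without manual effort.
        "automated_pct": 100 * automated_tests / total_tests,
        # Manual testing effort accumulated over one PI.
        "manual_hours_per_pi": manual_hours_per_iteration * iterations_per_pi,
    }


baseline = automation_baseline(
    total_tests=400,
    automated_tests=120,
    manual_hours_per_iteration=16,
    iterations_per_pi=5,
)
print(baseline)
```

Recording the same figures again at the end of the PI gives the team a before-and-after comparison to bring to stakeholders.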

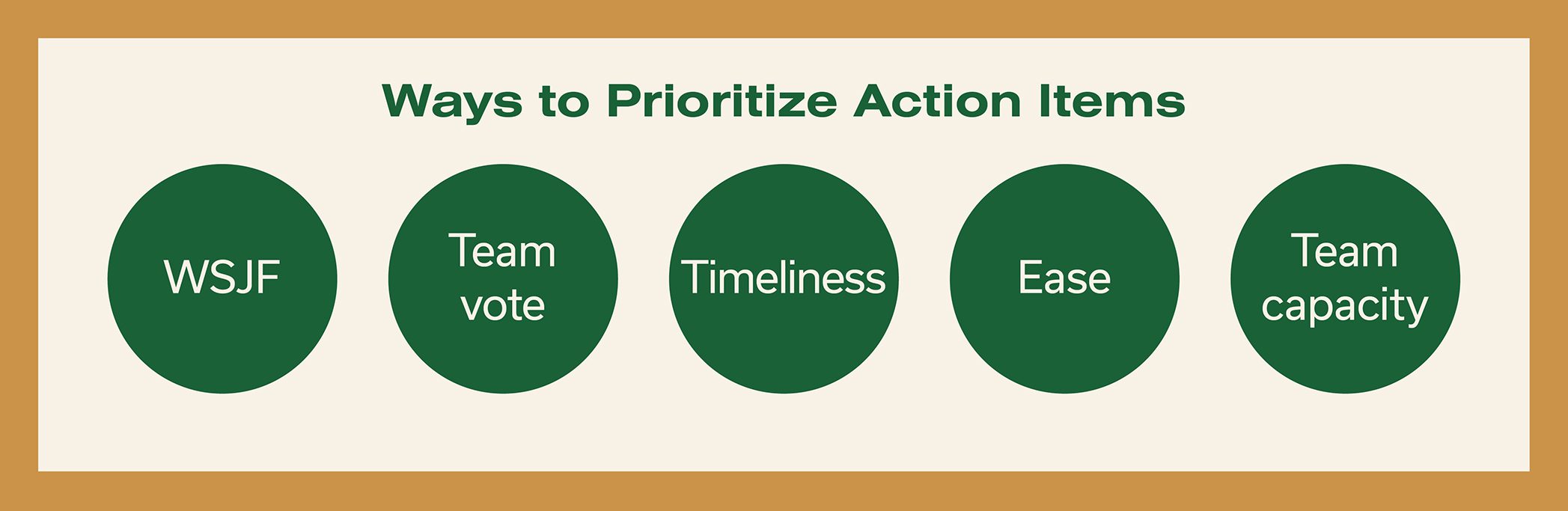

Here are some actions you should take once you’ve completed the assessment:

- Review the team growth recommendations together to generate ideas

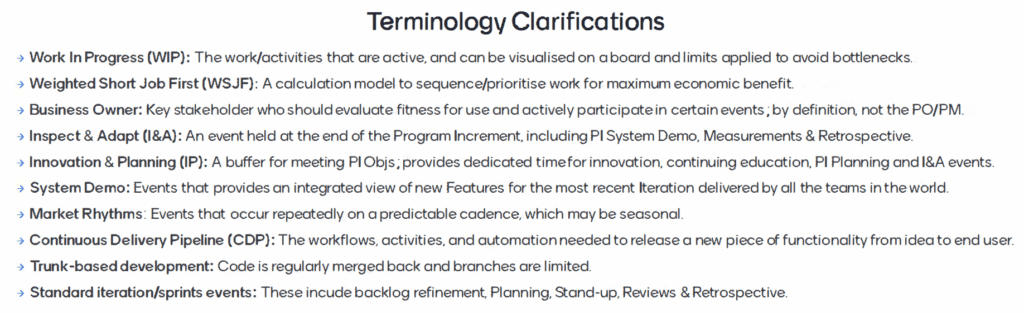

- Select your preferred actions (you can use dot voting or WSJF calculations for this; SAFe® Studio has ready-made templates you can use)

- Capture your team’s next steps in writing: “Our team decided to do X, Y, and Z.”

- Follow through on your actions so that you’re connecting them to the desired outcome

- Review your progress at the beginning of iteration retrospectives
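If you choose WSJF to select actions, the calculation divides the cost of delay (user-business value plus time criticality plus risk reduction or opportunity enablement) by job size, and the highest score goes first. The Python sketch below shows that arithmetic for a handful of improvement actions. The `Action` class, the field names, and the relative estimates are assumptions for illustration, not output from SAFe® Studio or its templates.

```python
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    business_value: int    # relative estimate
    time_criticality: int  # relative estimate
    risk_reduction: int    # risk reduction or opportunity enablement
    job_size: int          # relative effort; must be greater than zero

    @property
    def wsjf(self) -> float:
        # Cost of delay is the sum of the three value components.
        cost_of_delay = (
            self.business_value + self.time_criticality + self.risk_reduction
        )
        return cost_of_delay / self.job_size


def rank(actions):
    """Return actions ordered from highest to lowest WSJF."""
    return sorted(actions, key=lambda a: a.wsjf, reverse=True)


# Illustrative estimates only; your team would agree on its own numbers.
actions = [
    Action("Maintain a list of key stakeholders", 3, 2, 1, 2),
    Action("Schedule backlog refinement on a cadence", 5, 3, 2, 1),
    Action("Create a plan for increasing test automation", 8, 5, 8, 5),
]

ranked = rank(actions)
for action in ranked:
    print(f"{action.wsjf:.1f}  {action.name}")
```

With these sample numbers, the small, quick action ranks first even though the test automation plan has the largest cost of delay, which is the effect WSJF is designed to produce.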

Finally, you’ll want to use these actions to set a focus for the team throughout the PI. Then check in with Business Owners at PI planning on how these improvements have helped the organization progress toward its goals.

Tip: Simultaneously addressing all focus areas may be tempting, but you want to limit your WIP.

To do this, pick one focus area based on the results. You can add the remaining focus areas to the backlog to begin working on once you’ve addressed the first one.

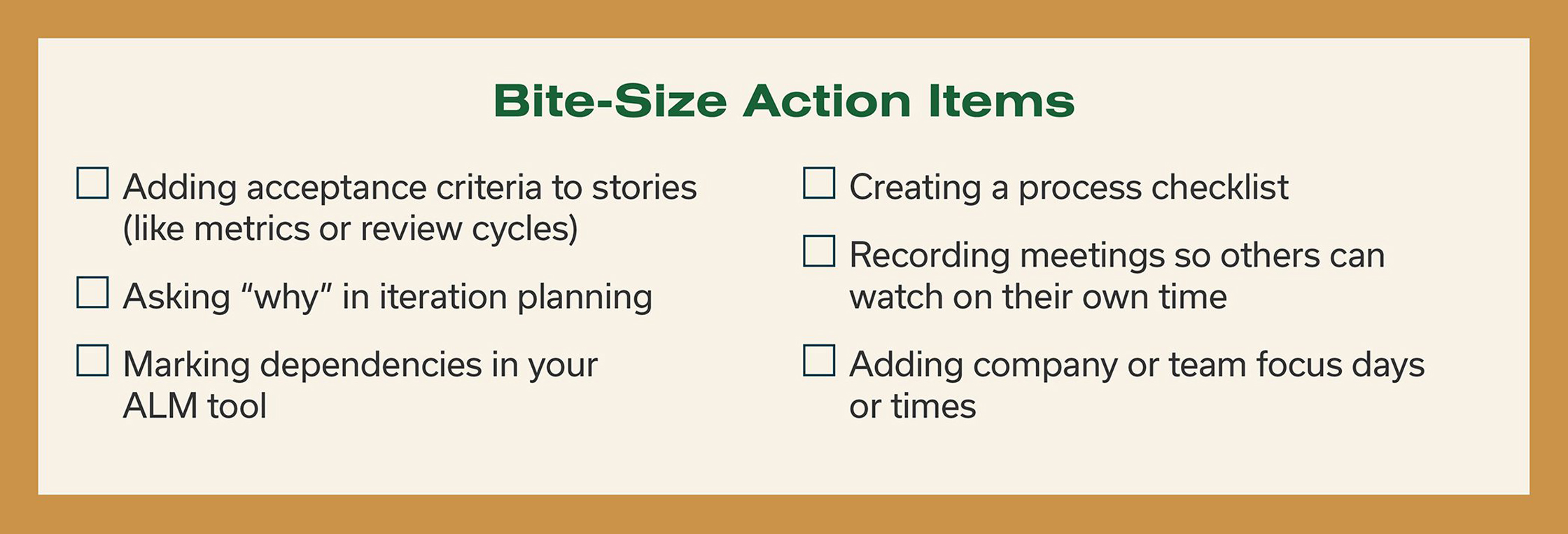

Feeling overwhelmed by the action items for your team? Try breaking them down into bite-size tasks to make it easier on capacity while still making progress.

These are some examples.

Team and Technical Agility Assessment Resources

Here are some additional resources to consider when assessing your team’s agility.

- Measure and Grow FAQs

- Measure and Grow forum

- Measure What Matters for Business Agility video

- Measure and Grow page in SAFe® Studio (My SAFe Assessments from the navigation bar)

- How Do We Measure Feelings? blog post

- Eight Patterns to Set Up Your Measure and Grow Program for Success blog post

- Measuring Agile Maturity with Assessments blog post

About the authors

Lieschen Gargano is a Release Train Engineer and conflict guru, thanks in part to her master’s degree in conflict resolution. As the RTE for the development value stream at Scaled Agile, Inc., Lieschen loves cultivating new ideas and approaches to Agile to keep things fresh and engaging. She also has a passion for developing practices for happy teams of teams across the full business value stream.

Sam is a certified SAFe® 6 Practice Consultant (SPC) and serves as the SM/TC for several teams at Scaled Agile. His recent career highlights include entertaining the crowd as the co-host of the 2019, 2020, and 2021 Global SAFe® Summits. A native of Columbia, South Carolina, Sam lives in Kailua, Hawaii, where he enjoys CrossFit and Olympic weightlifting.